Hacker News broke our site – how Nginx and PageSpeed fixed the problem

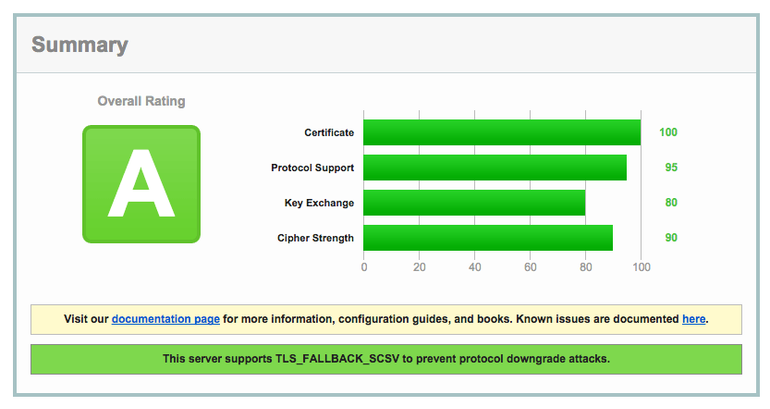

After a surge of traffic from Hacker News overloaded our servers, we rebuilt our hosting stack using current best practices for high-performance browser networking. The result: scores of 94/100 and above on Google PageSpeed Insights, and an A+ on SSL Labs. In this post we share how we did it.

What happened?

After a big revamp of www.airport-parking-shop.co.uk in 2014, we wrote an article on how we built the accompanying mobile app in 2 weeks using the Ionic framework and posted a link to Hacker News on Wednesday, November 12th.

Within an hour our link had jumped to the second spot on the Hacker News homepage and, as a result, our site got bombarded for the next few hours.

Eventually, as visitor numbers grew, it all got a bit too much and things started falling apart despite our best efforts! Our new site simply couldn’t keep up and pages began to time out, which very quickly lost us our spot on the Hacker News homepage.

Assessing the situation

The interest in our blog post was fantastic, but the unexpected surge in traffic had exposed some serious issues at our end, and had us rethinking the way we host our site.

We were using a conventional LAMP environment on a shared hosting platform and scoring in the high 70s on PageSpeed Insights.

Our recent switch to HTTPS, prompted by Google’s inclusion of HTTPS as a ranking factor, didn’t help either, as our implementation was far from optimised.

We looked at what went wrong and decided to a) dramatically increase the load capacity of our site and b) push our Google PageSpeed score into the high 90s.

Ansible and Digital Ocean for testing and deployment

We decided to start with a bare CentOS server on Digital Ocean, as they provide a simple, proven platform that would allow us to scale easily in the future. The ease of restoring droplets, creating snapshots and switching between OS images also meant we could test and restore very quickly.

Having previously worked with Ansible, this was also a no-brainer.

Ansible is an automation tool that helps you run commands on a server by means of a Playbook (think of this as a recipe for what to prepare on the server). Ansible itself describes it as a “human-readable IT automation language”.

This allowed us to configure our new server environment in a well-documented way (by means of the playbook) that we could run over and over tweaking each and every bit of the environment without having to repeat ourselves.

Ansible would also allow us to deploy our site in the exact environment in a matter of minutes, should we ever decide to move to a different hosting provider. A development time investment well worth it!

Setting up Nginx & PHP

Airport Parking Shop had been running on Apache for the past 11 years, and whilst none of us had any real experience with either Nginx or Litespeed, we felt that the raw performance gains from switching to one of the alternatives were well worth the learning curve.

When comparing Nginx and Litespeed, the latter would’ve been preferable for both its similarities to Apache and its outright speed, but ultimately we were nudged in the direction of Nginx for its vast community, resources and growing popularity.

Our first challenge, of course, was getting our Apache rules ported to Nginx. These have built up significantly over the years, but with some trial and error we were eventually able to figure them out and personally I now much prefer the Nginx config over that of Apache.
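To give a feel for what this porting involves, here is a minimal sketch of two common cases. These are generic examples rather than our actual rules, and the `index.php` front controller and the page names are assumptions made purely for illustration.

```nginx
# Apache (.htaccess) equivalent, shown for comparison:
#   RewriteCond %{REQUEST_FILENAME} !-f
#   RewriteCond %{REQUEST_FILENAME} !-d
#   RewriteRule ^ index.php [L]
location / {
    # Serve the file or directory if it exists, otherwise hand the
    # request to the (hypothetical) index.php front controller.
    try_files $uri $uri/ /index.php?$args;
}

# Apache equivalent:
#   RewriteRule ^old-page$ /new-page [R=301,L]
location = /old-page {
    # Permanent redirect for a single (hypothetical) URL.
    return 301 /new-page;
}
```

Both blocks belong inside a `server` block. In many cases a chain of Apache rewrite conditions collapses into a single `try_files` or `return` line like this.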

Our setup comprises Nginx & PHP-FPM with OPcache, and whilst we tested and liked HHVM, it simply felt a bit too bleeding edge and just didn’t sit well on our production environment.

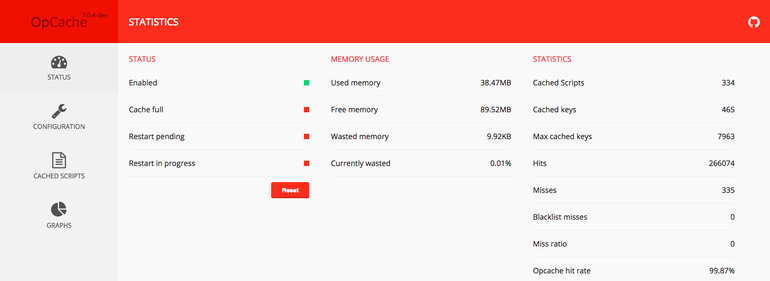

We built PHP 5.5 with OPcache from source and also enabled a very handy interface, called OpCacheGUI, for monitoring OPcache performance.

What about the caching?

As for caching, we added Nginx’s FastCGI Cache and created a setup roughly similar to this guide provided by rtCamp.

This also adds a very handy response header to check whether the page was served from cache or not.

# Enable cache HIT or MISS status in headers
add_header rt-Fastcgi-Cache $upstream_cache_status;
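For context, a FastCGI cache setup along these lines might look roughly like the sketch below. It is not our exact configuration: the cache path, the `APPCACHE` zone name, the sizes, the skip rules and the PHP-FPM address are all assumptions chosen for illustration.

```nginx
# http context: where cached responses live and how they are keyed.
# Path, zone name and sizes are hypothetical.
fastcgi_cache_path /var/cache/nginx levels=1:2 keys_zone=APPCACHE:100m inactive=60m;
fastcgi_cache_key "$scheme$request_method$host$request_uri";

server {
    listen 80;
    server_name example.com;
    root /var/www/example;

    # Skip the cache for POST requests and requests with a query string.
    set $skip_cache 0;
    if ($request_method = POST) { set $skip_cache 1; }
    if ($query_string != "")    { set $skip_cache 1; }

    location ~ \.php$ {
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass 127.0.0.1:9000;   # assumed PHP-FPM address

        fastcgi_cache APPCACHE;
        fastcgi_cache_valid 200 60m;   # cache successful responses for an hour
        fastcgi_cache_bypass $skip_cache;
        fastcgi_no_cache $skip_cache;

        # Reports HIT, MISS, BYPASS etc. in the response headers.
        add_header rt-Fastcgi-Cache $upstream_cache_status;
    }
}
```

Requesting the same page twice and checking the `rt-Fastcgi-Cache` response header should show a MISS followed by a HIT.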

As per many articles we explored the idea of serving our caches from memory using a tmpfs filesystem, in our case just the standard CentOS /dev/shm volume.

There are a few things to consider when doing this, though.

Firstly, whilst not a massive concern, restarting the system will obviously destroy the cache as it is in memory, and secondly, cache files will take up their exact size in system memory!

Whilst this shouldn’t be a problem on anything with more than 2GB of RAM, smaller servers should exercise caution. When using, say, a 512MB Digital Ocean server, a 100MB cache will take up a fifth of your system memory. That’s a significant amount of memory and could be the difference between staying up or going down during unexpected traffic spikes.

In our case we couldn’t notice much of a difference even when load testing our site, so we simply stuck to good old disk storage. This could be because Digital Ocean uses SSDs only; with traditional storage you might see a more significant increase in performance.
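If you do want to try the in-memory variant, a sketch might look like this. The directory and zone name are hypothetical, and the 100MB cap simply mirrors the example figure used above.

```nginx
# http context. Path and zone name are hypothetical.
# keys_zone sizes the shared memory zone that holds cache keys and metadata;
# max_size caps the cached responses themselves, which on a tmpfs volume
# such as /dev/shm also occupy RAM.
fastcgi_cache_path /dev/shm/nginx-cache levels=1:2 keys_zone=APPCACHE_MEM:10m max_size=100m inactive=60m;
```

Setting `max_size` explicitly is the important part on a small server, as it puts an upper bound on how much memory the cache can consume.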

Optimising SSL

With our server configured, tested and running well, next we took on improving the SSL performance of our site.

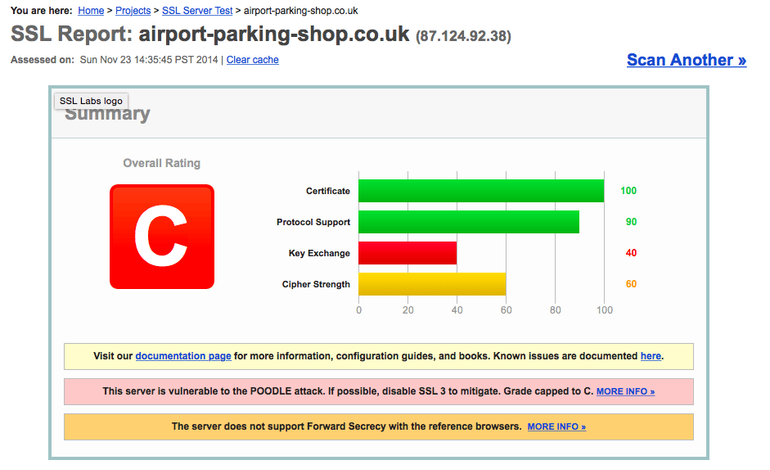

Our SSL implementation was quite basic and we didn’t score too well on the SSL Labs test.

Firstly, we did the basics like enabling HSTS, SSL session cache, increasing the SSL TTL etc.

# Enable HSTS
add_header Strict-Transport-Security "max-age=31536000;";
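Put together, these basics might look something like the following sketch. The cache size and timeout are illustrative values, not the ones from our production config.

```nginx
# http or server context. Values are illustrative assumptions.

# Share TLS session data between worker processes so returning
# visitors can resume a session instead of doing a full handshake.
ssl_session_cache shared:SSL:10m;

# How long a cached session may be reused (the Nginx default is 5m).
ssl_session_timeout 10m;

# Tell browsers to use HTTPS only for the next year.
add_header Strict-Transport-Security "max-age=31536000;";
```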

We also added the Nginx SPDY module to enable the SPDY protocol and this really did help move things along.

In Google’s own words: “SPDY manipulates HTTP traffic, with particular goals of reducing web page load latency and improving web security.”

It’s worth noting that Google has officially stopped developing SPDY in favour of HTTP/2, but don’t let this put you off using SPDY. It’s well developed and isn’t going to stop working any time soon. HTTP/2 also isn’t quite ready yet and isn’t even enabled by default in Chrome (it can be enabled through Chrome flags), so SPDY really is the best option right now.
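Once the module is compiled in, switching SPDY on is a one-word change on the `listen` directive. A minimal sketch, with a hypothetical server name and certificate paths:

```nginx
server {
    # The "spdy" parameter requires Nginx to be built with
    # --with-http_spdy_module.
    listen 443 ssl spdy;
    server_name example.com;

    # Hypothetical certificate locations.
    ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;
}
```

Browsers without SPDY support fall back to regular HTTPS on the same port.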

Finally, we enabled OCSP stapling. In a nutshell, OCSP is the process by which the client (browser) contacts the Certificate Authority to check the revocation status of the SSL certificate. OCSP stapling lets the server attach (“staple”) a signed, timestamped response from the Certificate Authority during the SSL handshake, which saves the client from contacting the CA itself and spares an extra network round trip.

This can shave off a few hundred milliseconds in the whole client server SSL negotiation and makes a very nice speed improvement for very little effort.

There are plenty of resources on how to set this up, but essentially this is what the config looks like in the nginx.conf file:

# Enable OCSP Stapling
ssl_stapling on;
ssl_stapling_verify on;
resolver 8.8.8.8 8.8.4.4 valid=60s;
resolver_timeout 5s;

It’s worth noting that you will need to create certificate chains which include all your intermediate certificates, a fairly straightforward process, but slightly different for each certificate provider.
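As a rough sketch of how the chain files fit in, assuming hypothetical file names under `/etc/nginx/ssl`:

```nginx
# Site certificate followed by the intermediate certificates,
# concatenated into a single file (hypothetical path).
ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
ssl_certificate_key /etc/nginx/ssl/example.com.key;

ssl_stapling on;
ssl_stapling_verify on;

# Root and intermediate certificates of the issuing CA, used to
# verify the OCSP response (hypothetical path).
ssl_trusted_certificate /etc/nginx/ssl/ca-chain.crt;

# Nginx needs a resolver to look up the CA's OCSP responder.
resolver 8.8.8.8 8.8.4.4 valid=60s;
resolver_timeout 5s;
```

The order inside the chained file matters: the site certificate comes first, then the intermediates.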

Adding PageSpeed module

So far all our efforts were focused on improving our hosting environment, so next we turned to the site itself and getting that PageSpeed score up.

We firstly did what we could to manually improve the site by moving some scripts to the bottom of pages or deferring non-essential ones.

We also implemented a very basic script to concatenate and minify our assets.

This all made a noticeable improvement, but the real number came with adding the PageSpeed module.

Google’s PageSpeed module is available for both Apache and Nginx, and whilst adding it to Apache is a fairly straightforward affair, the Nginx module needs to be added when building Nginx from source. That said, Google provides very thorough instructions on how to do this and with the help of Ansible this was a one-time task.

The PageSpeed module offers a huge number of configurable filters and the Core Filters (default setting) will provide a very good setup, although we tweaked it slightly by adding the following:

We explicitly disabled defer_javascript as this can cause issues with scripts that need to load in a certain order. Of course the ideal would be to use a script loader like RequireJS, but we were dealing with what is essentially an 11-year-old site that’s had a front-end makeover.

pagespeed DisableFilters defer_javascript;

We also enabled collapse_whitespace and remove_comments to essentially minify HTML, lazyload_images to avoid loading images outside of the user’s viewport and a number of image filters to optimise image compression, conversion and resizing.

Here is a list of filters we enabled and configured:

pagespeed EnableFilters collapse_whitespace;

pagespeed EnableFilters insert_dns_prefetch;

pagespeed EnableFilters prioritize_critical_css;

pagespeed EnableFilters lazyload_images;

pagespeed EnableFilters local_storage_cache;

pagespeed EnableFilters make_google_analytics_async;

pagespeed EnableFilters extend_cache;

pagespeed EnableFilters remove_comments;

pagespeed EnableFilters elide_attributes;

pagespeed EnableFilters remove_quotes;

pagespeed EnableFilters trim_urls;

pagespeed EnableFilters rewrite_images;

pagespeed EnableFilters inline_images;

pagespeed EnableFilters recompress_images;

pagespeed EnableFilters convert_gif_to_png;

pagespeed EnableFilters convert_jpeg_to_progressive;

pagespeed EnableFilters recompress_jpeg;

pagespeed EnableFilters recompress_png;

pagespeed EnableFilters recompress_webp;

pagespeed EnableFilters strip_image_color_profile;

pagespeed EnableFilters strip_image_meta_data;

pagespeed EnableFilters jpeg_subsampling;

pagespeed EnableFilters convert_png_to_jpeg;

pagespeed EnableFilters resize_images;

pagespeed EnableFilters resize_rendered_image_dimensions;

pagespeed EnableFilters convert_jpeg_to_webp;

pagespeed EnableFilters convert_to_webp_lossless;

pagespeed ImageRecompressionQuality 50;

pagespeed JpegRecompressionQuality 50;

pagespeed JpegRecompressionQualityForSmallScreens 50;
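The filter directives only take effect once the module itself is switched on and given somewhere to store its optimised resources. A minimal sketch following the module's setup instructions, with the cache path being an assumption:

```nginx
# Inside the server block. The cache path is a hypothetical location
# and must be writable by the Nginx worker user.
pagespeed on;
pagespeed FileCachePath /var/cache/ngx_pagespeed;

# Several filters can share one directive as a comma-separated list.
pagespeed EnableFilters collapse_whitespace,remove_comments;

# Make sure requests for PageSpeed-optimised resources reach the module.
location ~ "\.pagespeed\.([a-z]\.)?[a-z]{2}\.[^.]{10}\.[^.]+" {
    add_header "" "";
}
location ~ "^/pagespeed_static/" { }
location ~ "^/ngx_pagespeed_beacon$" { }
```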

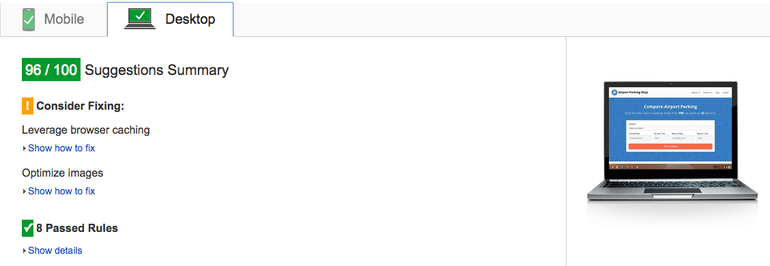

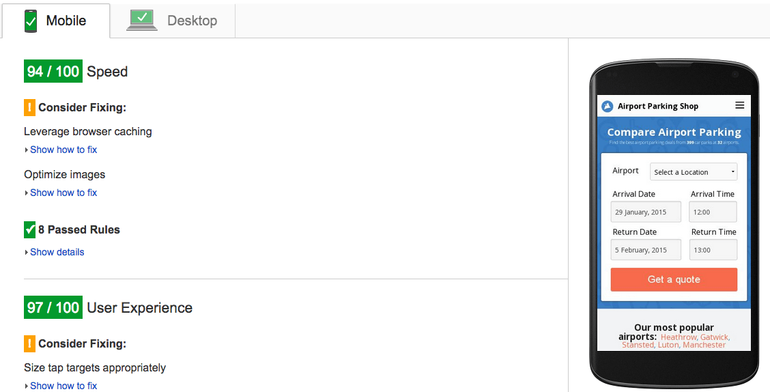

With PageSpeed set up we instantly increased our PageSpeed score from the high 70s to a very impressive 96 for desktop and 94 for mobile!

Now for the unfortunate downside of using the PageSpeed module. It’s an incredibly ‘heavy’ module and does significantly reduce the number of requests the server will be able to handle.

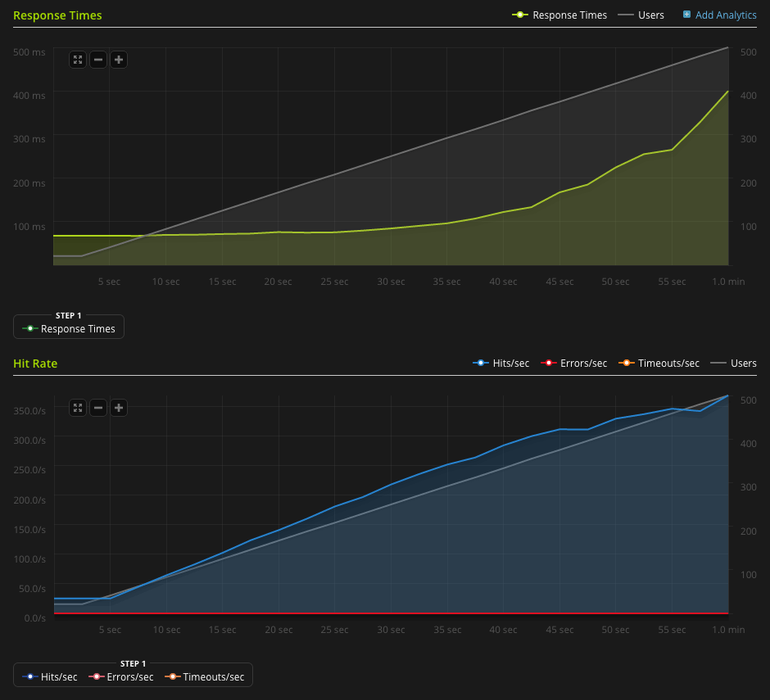

Here’s a comparison of Airport-Parking-Shop running without PageSpeed:

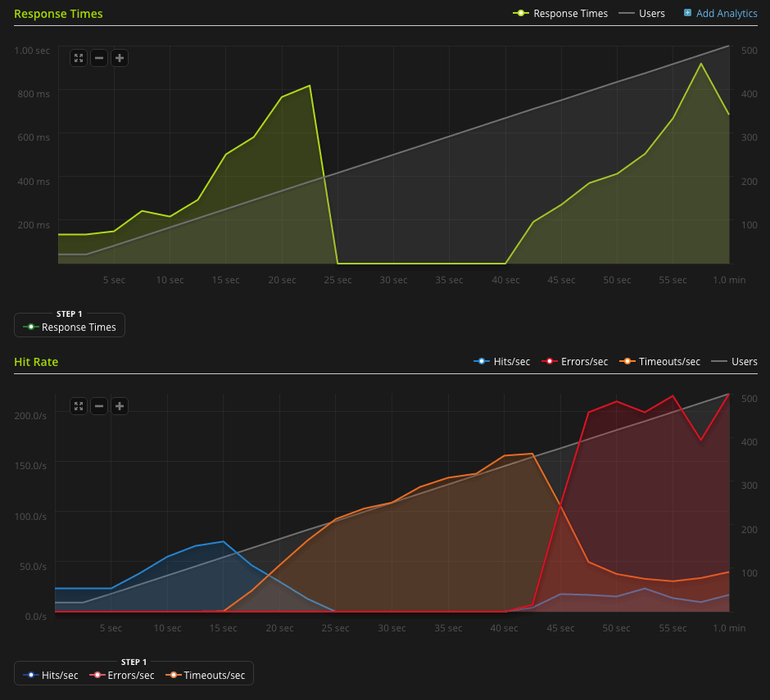

And running with PageSpeed:

So what to make of this? Well, it’s really down to compromise. Do you want to be able to handle thousands of requests per second or serve up the best possible experience for your users? Of course, in an ideal world you’d manually make all the improvements that the PageSpeed module applies and serve up a site with a PageSpeed score of 90+ to thousands of users per second. This, however, is probably not going to be necessary for anyone other than the really big sites with hundreds of thousands of users per day, so having PageSpeed do the hard work sits well with us.

Whilst the test with PageSpeed enabled looks pretty atrocious, we’re still able to handle significantly more requests than we ever did on our old Apache setup and this test is merely to illustrate the cost of PageSpeed’s brilliance. The response time timeout was set to 1 second, so the server would most likely have responded to the majority of failed requests given some more time.

Additional tweaks and conclusion

Some other notable performance tweaks and extras we implemented include enabling Gzip on Nginx and setting expires headers on static assets, although PageSpeed will handle some of this as well.
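A sketch of what the Gzip and expires settings might look like. The compression level, MIME types, file extensions and 30-day lifetime are illustrative assumptions rather than our exact values.

```nginx
# http context: compress text-based responses.
# text/html is always compressed once gzip is on.
gzip on;
gzip_comp_level 5;
gzip_min_length 256;
gzip_vary on;
gzip_types text/css application/javascript application/json image/svg+xml;

# server context: let browsers cache static assets for 30 days.
location ~* \.(css|js|png|jpe?g|gif|ico|svg)$ {
    expires 30d;
    add_header Cache-Control "public";
}
```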

We disabled TCP Slow Start, a congestion control method, which has its uses, but ultimately hinders performance. An interesting article about TCP Slow Start and performance here.

We also enabled TCP IW10, which basically means increasing TCP’s initial window to 10 MSS (Maximum Segment Size) and thus reducing latency, and enabled query cache on our MySQL (MariaDB) server.

All these configurations and tweaks are the result of a month spent reading, researching and testing things that were very new to all of us and it has really taught us a lot.

Here is an overview of the steps we took to set up our server as per our Ansible playbook:

1. Start with a server running bare CentOS 6.5
2. Create a swap file – 2GB in our case
3. Configure the CentOS firewall via iptables
4. Install & configure MySQL (MariaDB)
5. Install 3rd party yum repos like Webtatic
6. Install all the required PHP5 modules, e.g. php-fpm, php-mysql etc.
7. Download Nginx source and any submodules, e.g. ngx_pagespeed
8. Build Nginx
9. Configure PHP-FPM & Nginx (this includes adding PageSpeed filters etc.)
10. Clone project repo
11. Set TCP Initial Window size to 10 (iw10)
12. Disable TCP Slow Start
13. Install & configure both system timezone files and NTP
14. Start & enable all services, e.g. Nginx, MySQL, PHP-FPM

Whilst we are still far from having the perfect setup, we managed to take an 11-year-old website, with a lot of cobwebs and code smell, and optimise it into a fast-loading, modern-feeling website that puts user experience first on any platform.

We’ve seen a definite increase in user engagement and conversion, and the time invested is paying off on a daily basis.

So, to conclude, if you’re considering improving your website performance and user experience I encourage you to get your hands dirty and start from scratch. Test, change, learn and improve!